When we talk about digitalization, the most important question is simple: what is already working in newsrooms and where is it having a tangible effect?

The third mentoring session of the National Union of Journalists of Ukraine (NUJU) course Strengthening the Resilience of Frontline Media, led by journalist and media coach Andrii Yurychko, presented practical cases ranging from automated financial reports to internal editing tools. Below is a brief summary with links.

- Who uses LLMs in newsrooms and why

- What the audience thinks (and why transparency is critical)

- What not to do

- How to reproduce the main points from the training

Who uses LLMs in newsrooms and why

In newsrooms today, language models are used mainly for routine tasks and for scaling: transcription, drafting, quick summaries, SEO headlines, translation, archive searches, and coding help for product teams.

Larger news organizations also publish AI policies that set out what is allowed, how AI-assisted content is labeled, and where a ‘human in the loop’ is required.

News agencies do not use AI to publish ready-made content or images; they use it to generate ideas, assist with editing, and similar tasks, always under the journalist’s responsibility (see the AP guidance on generative AI).

“First of all, it’s about creating drafts and ideas with LLMs. Many of the publications I am proud of were born precisely through conversations with a language model. For example, I suggest a topic and ask for options on how best to cover it for the audience. It can give dozens of options, and that is inspiring,” said media coach and journalist Andrii Yurychko.

Since 2014-2015, the Associated Press has automatically generated thousands of short reports on companies’ quarterly earnings from structured data. This has increased its coverage many times over and freed journalists from monotonous routine.

How it works: data → templates → editor control.
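
The short Python sketch below makes the “data → templates → editor control” pipeline concrete. It is not AP’s actual system. The field names, the template wording, and the 50% threshold for flagging a result are assumptions chosen for illustration. Structured data fills a fixed template, unusual values are flagged, and no draft counts as approved until a human editor approves it.

```python
from dataclasses import dataclass, field


@dataclass
class EarningsData:
    """Step 1: structured data (field names are hypothetical)."""
    company: str
    quarter: str
    revenue_m: float       # revenue, millions of USD
    prev_revenue_m: float  # same quarter one year earlier
    eps: float             # actual earnings per share, USD
    eps_forecast: float    # analysts' consensus forecast, USD


@dataclass
class Draft:
    text: str
    flags: list[str] = field(default_factory=list)
    approved: bool = False  # only a human editor should set this to True


def render(d: EarningsData) -> Draft:
    """Step 2: fill a fixed template; flag oddities for step 3 (editor review)."""
    change = (d.revenue_m - d.prev_revenue_m) / d.prev_revenue_m * 100
    direction = "rose" if change >= 0 else "fell"
    if d.eps > d.eps_forecast:
        verdict = "beat"
    elif d.eps < d.eps_forecast:
        verdict = "missed"
    else:
        verdict = "matched"
    text = (
        f"{d.company} reported {d.quarter} revenue of ${d.revenue_m:,.1f} million, "
        f"which {direction} {abs(change):.1f}% from a year earlier. "
        f"Earnings per share of ${d.eps:.2f} {verdict} the analysts' forecast "
        f"of ${d.eps_forecast:.2f}."
    )
    draft = Draft(text)
    # Hypothetical rule: very large swings often signal a data error, so the
    # editor should check them against the company's filing before approval.
    if abs(change) > 50:
        draft.flags.append(f"revenue change of {change:.1f}% needs checking")
    return draft


draft = render(EarningsData("Example Corp", "Q2", 120.0, 100.0, 1.25, 1.10))
print(draft.text)
print("flags:", draft.flags, "approved:", draft.approved)
```

The template never invents anything beyond the numbers it receives. That constraint is what makes this kind of automation checkable, and the editor’s sign-off remains a separate, explicit step.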

AP publishes standards and limitations for generative AI: everything that goes “on air” is reviewed by a human and has a transparent source.

The BBC is testing, and gradually rolling out, short At a Glance summaries for articles, as well as Style Assist, a tool that helps authors with the style and clarity of their texts. A separate strand of work comes from BBC News Labs, which reformats content for different platforms, including as graphic storytelling.

All of this serves only as an aid, with mandatory human editing and clear labeling.

The operating principle: “human editor + transparent labels”, plus restrictions on sensitive topics.

Graphic storytelling is a modern journalistic approach that uses visual formats (animation, comics, infographics, interactive maps, or video) to reach a younger audience that gets its news mainly through social networks.

The goal is to convey the key points quickly and emotionally, lowering the “entry threshold” for complex subjects such as social or scientific topics.

The New York Times has officially trained its newsroom staff to use AI through an internal tool called Echo, for editing, summaries, SEO headlines, and even code. At the same time, the paper warns that AI should not be used to write or significantly alter articles; final control always remains with the editor.

Even before the LLM boom, Reuters developed Tracer, a system that analyzes millions of tweets, assesses the newsworthiness, reliability, and location of events, and generates short summaries for journalists. It is an example of AI infrastructure that does not replace journalists but gives them a head start in time.

What the audience thinks (and why transparency is critical)

According to the Reuters Institute’s Digital News Report 2024, only 36% of respondents are comfortable with news produced mostly by people with the help of AI.

The conclusion is clear: labeling AI use and explaining its role are essential; otherwise, trust declines.

Read more in the Reuters Institute report.

What not to do

- Publish “pure” AI texts or images without human verification and labeling.

- Treat the model as a “source of truth” on legal, medical, or political topics.

- Hide the use of AI: trust arises when you openly explain the role the tool played.

How to reproduce the main points from the training

During the mentoring session, Andrii Yurychko demonstrated how a language model can help with a fairly common journalistic task: converting recorded video or audio into text. To do this, upload the recording to your YouTube channel with restricted visibility (for example, “Unlisted”, so it is accessible only via the link). Then copy the video link and convert it to audio on the cnvmp3 website. Speech-recognition models do not need high-quality sound, so a bitrate of 96 kbps is quite enough. Download the resulting audio file and upload it to the speech model for transcription.
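
The bitrate also matters for a practical reason: it directly determines file size, and transcription services often limit upload size. The sketch below shows the arithmetic, assuming constant-bitrate audio and ignoring metadata. The 25 MB upload cap is a hypothetical example only, so check the actual limit of the service you use.

```python
def audio_size_mb(bitrate_kbps: float, minutes: float) -> float:
    """Approximate size of a constant-bitrate audio file in megabytes
    (1 MB = 1,000,000 bytes); metadata and container overhead are ignored."""
    bits = bitrate_kbps * 1000 * minutes * 60
    return bits / 8 / 1_000_000


def max_minutes(bitrate_kbps: float, limit_mb: float) -> float:
    """Longest recording that fits under an upload limit at a given bitrate."""
    bits_allowed = limit_mb * 1_000_000 * 8
    return bits_allowed / (bitrate_kbps * 1000) / 60


for kbps in (96, 320):
    print(f"{kbps} kbps: {audio_size_mb(kbps, 60):.1f} MB per hour")

# Hypothetical 25 MB upload limit, used only as an example.
print(f"96 kbps fits about {max_minutes(96, 25):.0f} minutes into 25 MB")
```

At 96 kbps an hour of audio is about 43 MB, compared with about 144 MB at 320 kbps. The lower bitrate gives more than three times as much recording per upload.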

Another service that can help here is https://123apps.com/, which offers a wide range of applications, including JPEG-to-PDF conversion, PDF-to-Word conversion, archiving, video trimming, etc.

Journalists also practiced prompts for fact-checking news stories: finding the original sources, confirming facts, and identifying who actually made a quoted statement.

“It was interesting to see how language models search for original sources and try to check each fact. They show good results, but only with information that search engines have already indexed,” notes Andrii Yurychko. “Sometimes the models gave incorrect links or pointed to irrelevant sources because the search keywords happened to match. In practice, a journalist with minimal search-engine skills can easily do all of this, but the advantage of language models is that they significantly reduce search and verification time. Incidentally, many of the news items originated in personal social media posts, which the website did not credit but presented as its own verified information.”

In other words, language models do not replace basic search skills, but they significantly cut the time needed to find and verify information.
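
Models sometimes return wrong or irrelevant links, so it helps to confirm mechanically that a cited page exists and actually contains the quote before reading it closely. The sketch below uses only the Python standard library. The crude tag stripping and the quote-normalization rules are simplifying assumptions. A match only shows that the text appears on the page, not that the page is the original source.

```python
import html
import re
import urllib.request

# Typographic quotation marks mapped to plain ones before comparing.
_QUOTE_MARKS = {"\u201c": '"', "\u201d": '"', "\u201e": '"', "\u00ab": '"',
                "\u00bb": '"', "\u2018": "'", "\u2019": "'"}


def normalize(text: str) -> str:
    """Unify quotation marks, collapse whitespace, and lowercase."""
    for fancy, plain in _QUOTE_MARKS.items():
        text = text.replace(fancy, plain)
    return re.sub(r"\s+", " ", text).strip().lower()


def html_to_text(raw: str) -> str:
    """Crude tag stripping; good enough for a substring check, not for parsing."""
    return html.unescape(re.sub(r"<[^>]+>", " ", raw))


def quote_appears(page_text: str, quote: str) -> bool:
    return normalize(quote) in normalize(page_text)


def check_source(url: str, quote: str, timeout: float = 10.0) -> str:
    """Fetch a page a model cited and report whether the quote is really there."""
    try:
        req = urllib.request.Request(url, headers={"User-Agent": "source-check/0.1"})
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            charset = resp.headers.get_content_charset() or "utf-8"
            raw = resp.read().decode(charset, errors="replace")
    except (OSError, ValueError, LookupError) as exc:  # bad URL, HTTP error, timeout
        return f"unreachable: {exc}"
    return "quote found" if quote_appears(html_to_text(raw), quote) else "quote NOT found"
```

Running `check_source` on each link a model returns gives a quick first pass. Anything reported as “NOT found” or “unreachable” goes back for manual checking.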

Why does a language model “slow down”, and what are tokens?

All language models work with tokens. A token is a unit of text: words are split into tokens, and each model has a limit on how many tokens it can handle. For Ukrainian, according to Yurychko, three words average about four tokens. Models are also trained predominantly on English text, so their tokenizers tend to split other languages into more tokens than English. Everything the model processes counts toward the limit, including the entire “thinking” process. For a single request, ChatGPT, for example, works with about 4,000 tokens, which by his estimate is about 700 to 800 words in the answer (1,200 at most). If you give it more material, it will simply not analyze all of it, or it will analyze it in fragments to stay within the limit. And if your “conversation” goes beyond the chat’s limit (32,000 or 64,000 tokens), the model simply “forgets” what you “talked” about at the beginning.

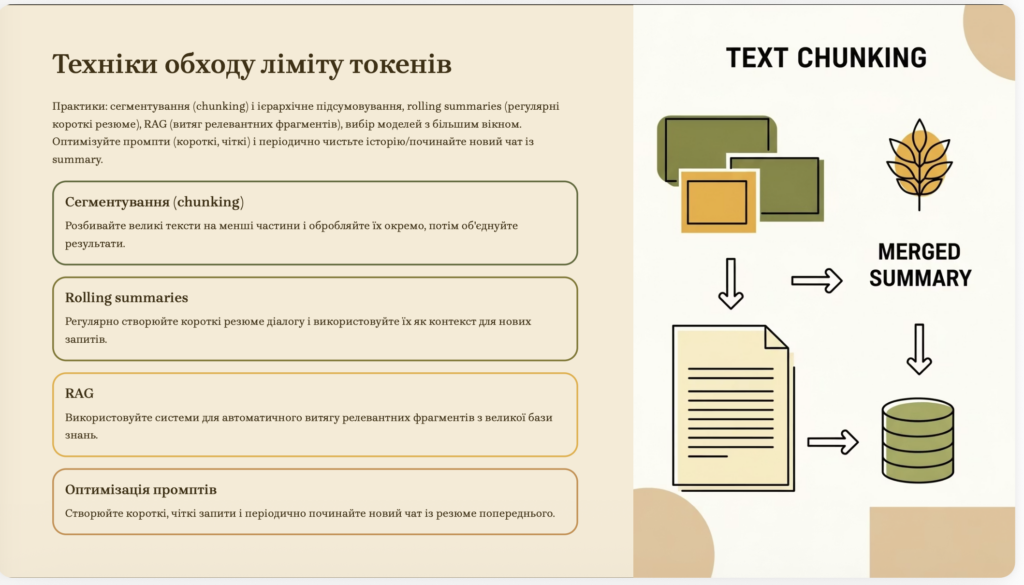
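
The sketch below illustrates the two effects described above under deliberately simplified assumptions. Token counts are estimated with the session’s rule of thumb (three words ≈ four tokens) rather than a real tokenizer. The chat is modeled as dropping its oldest messages once the context limit is exceeded. Real services count tokens with their own tokenizers and may handle overflow differently.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough estimate using the rule of thumb from the session: 3 words ≈ 4 tokens.
    A real tokenizer may give quite different numbers."""
    return math.ceil(len(text.split()) * 4 / 3)


def fit_to_context(messages: list[str], limit: int) -> list[str]:
    """Keep the most recent messages whose estimated total fits within `limit`.

    Older messages are dropped first, which is a simplified picture of why
    a long chat "forgets" how the conversation began.
    """
    kept: list[str] = []
    total = 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if total + cost > limit:
            break
        kept.append(message)
        total += cost
    return list(reversed(kept))


history = ["Topic: local elections", "Draft three headlines", "Now shorten the second one"]
print(estimate_tokens(history[0]))        # 3 words -> 4 tokens
print(fit_to_context(history, limit=10))  # ['Now shorten the second one']
```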

To work around token limits, Andrii Yurychko advises splitting a task into parts and, for new requests, providing short summaries of previous dialogues. It is also important to state the task clearly and concisely.
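
Here is a minimal sketch of this advice, again assuming the rough heuristic of three words per four tokens. A long text is split at paragraph boundaries into parts that fit a token budget, and each new request carries a short summary of what came before. `ask_model` is a hypothetical stand-in for whatever chatbot or API you use. It is passed in as a plain function so the sketch runs without any service.

```python
import math
from typing import Callable


def estimate_tokens(text: str) -> int:
    # Rough rule of thumb quoted in the session: 3 words ≈ 4 tokens.
    return math.ceil(len(text.split()) * 4 / 3)


def split_into_parts(text: str, budget: int) -> list[str]:
    """Group paragraphs into parts whose estimated size stays within `budget`.
    A single paragraph larger than the budget becomes a part on its own."""
    parts: list[str] = []
    current: list[str] = []
    used = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        cost = estimate_tokens(para)
        if current and used + cost > budget:
            parts.append("\n\n".join(current))
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        parts.append("\n\n".join(current))
    return parts


def process_in_parts(text: str, budget: int, task: str,
                     ask_model: Callable[[str], str]) -> list[str]:
    """Send each part as a separate request, carrying a short running summary.
    Keep `budget` below the model's limit to leave room for the task and summary."""
    summary = "none (this is the first part)"
    answers: list[str] = []
    for number, part in enumerate(split_into_parts(text, budget), start=1):
        answer = ask_model(f"{task}\n\nSummary of earlier parts: {summary}\n\n"
                           f"Part {number}:\n{part}")
        answers.append(answer)
        summary = ask_model(f"Summarize in two or three sentences:\n{summary}\n{answer}")
    return answers
```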

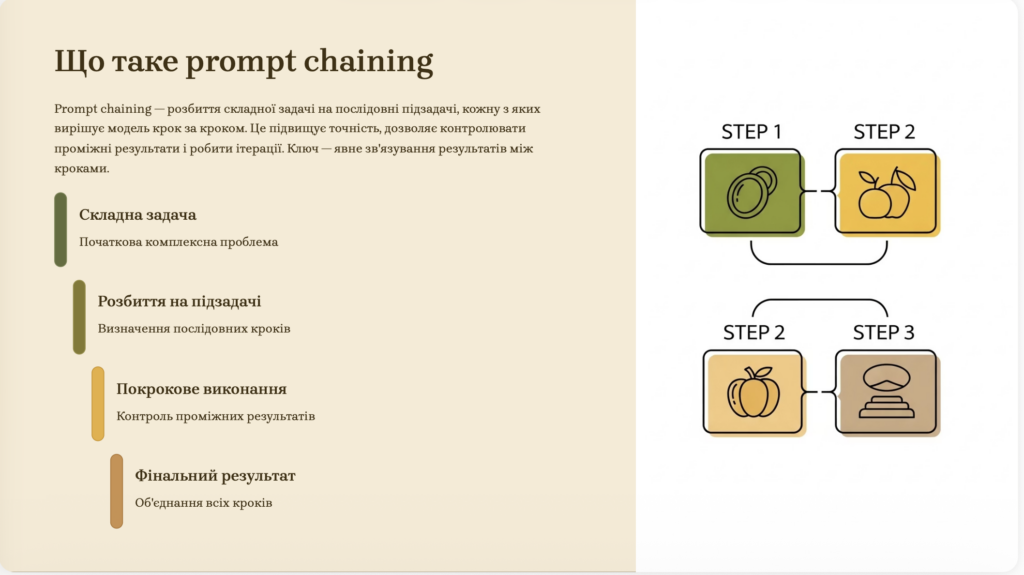

Components of an effective prompt:

- Role (who answers). For example, you can address the model with phrases such as “You are a journalist” or “You are a journalist’s assistant who has…”

- Task (what to do).

- Format (how to present the result, the structure of the answer).

- Tone and length of the answer.

- Context (additional examples).
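
One way to include these five components in every request is a small reusable template. The sketch below shows one possible way to organize them, not a required syntax. Models accept free-form text, and the labels “Role:”, “Task:”, and so on simply make the structure explicit. The example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prompt:
    role: str             # who answers
    task: str             # what to do
    output_format: str    # structure of the result
    tone_and_length: str  # register and size limits
    context: str = ""     # background, examples (optional)

    def render(self) -> str:
        lines = [
            f"Role: {self.role}",
            f"Task: {self.task}",
            f"Format: {self.output_format}",
            f"Tone and length: {self.tone_and_length}",
        ]
        if self.context:
            lines.append(f"Context: {self.context}")
        return "\n".join(lines)


prompt = Prompt(
    role="You are an assistant to a journalist at a regional news outlet.",
    task="Suggest ways to cover the reopening of a local school after repairs.",
    output_format="A numbered list of five ideas, from the most typical to the most unusual.",
    tone_and_length="Neutral and concise, no more than 150 words in total.",
    context="Our readers mostly follow us on social media.",
)
print(prompt.render())
```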

“I often ask: ‘Give me five ideas, from the most typical to the most fantastic,’ and then: ‘Give me five more ideas on the same principle.’ Almost always, the second or third option turns out to be the most interesting,” shares Andrii Yurychko.

Andrii Yurychko also advises preparing prompt templates and asking the language model to write a prompt that you can then use in other language models. This is especially useful for image generation.
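
A template of this kind can be as simple as text with placeholders. The sketch below uses Python’s standard `string.Template`. The wording and the placeholder names (`$subject`, `$style`, `$target_model`) are hypothetical. You fill in the blanks, send the result to one model, and reuse the prompt it writes in another, for example an image generator.

```python
from string import Template

# Hypothetical reusable "prompt for writing a prompt"; the wording is an example.
META_PROMPT = Template(
    "Write a detailed prompt for $target_model that will produce $subject. "
    "Style: $style. Describe composition, lighting, and mood, and list what "
    "must NOT appear in the image. Return only the prompt text."
)

request = META_PROMPT.substitute(
    target_model="an image-generation model",
    subject="an illustration of volunteers repairing a damaged school roof",
    style="documentary, muted colors, no text in the image",
)
print(request)
```

`substitute` raises `KeyError` if a placeholder is left unfilled, which catches incomplete templates before they reach a model.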

“Never count on any single language model to fulfill all your whims and desires. It is better to mix them: see which one gives the best options, give one model’s answer to another to check, and so on. It doesn’t take much time, but the quality of the text improves many times over,” says Andrii Yurychko.
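
The mix-and-check routine can also be scripted. In this sketch, each model answers the same prompt and a different model then reviews that answer. The models are hypothetical stand-ins: plain functions that take a prompt and return text. This keeps the sketch independent of any particular provider’s API. A human still compares the reviews and decides.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical: takes a prompt, returns text


def cross_check(prompt: str, models: dict[str, Model]) -> list[dict[str, str]]:
    """Ask every model the same prompt, then have the next model in the list
    review that answer, so no model checks its own work."""
    if len(models) < 2:
        raise ValueError("cross-checking needs at least two models")
    names = list(models)
    results = []
    for i, name in enumerate(names):
        answer = models[name](prompt)
        reviewer = names[(i + 1) % len(names)]
        review = models[reviewer](
            "Check this answer for factual errors, unsupported claims, "
            f"and weak spots:\n\n{answer}"
        )
        results.append({"model": name, "answer": answer,
                        "reviewer": reviewer, "review": review})
    return results


fake_models = {
    "model_a": lambda p: f"A says: {p[:20]}",
    "model_b": lambda p: f"B says: {p[:20]}",
}
for row in cross_check("Five angles on the story", fake_models):
    print(row["model"], "reviewed by", row["reviewer"])
```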

Conclusion

Modern LLMs in journalism are about procedures, not about replacing authorship.

Where there is an editorial policy, labeling, and a ‘human in the loop,’ language models provide speed and scale without undermining trust.

This is the kind of digitalization we are developing in the NUJU course Strengthening the Resilience of Frontline Media.

The project is funded by the Embassy of the Republic of Lithuania in Ukraine within the framework of the Development Cooperation and Democracy Promotion Programme.

THE NATIONAL UNION OF

JOURNALISTS OF UKRAINE
